Literature Review: Scaling Monosemanticity – Extracting Interpretable Features from Claude 3 Sonnet

This report by Anthropic’s interpretability team reports on scaling sparse autoencoders (SAEs) to Claude 3 Sonnet. The authors successfully extract millions of interpretable features, showing that even very large models contain directions in activation space that can be decomposed into semantically meaningful, often monosemantic, representations.

Key Insights

-

Features are more interpretable than neurons.

Across systematic comparisons, features learned by SAEs were more semantically consistent and specific than raw neurons. This validates dictionary learning as a superior approach for mechanistic interpretability. -

Concept coverage grows with feature capacity.

As SAE dictionary size increases, more rare and fine-grained concepts emerge (i.e., San Francisco neighborhoods splitting into subfeatures). Frequency in training data strongly predicts which concepts have dedicated features. -

Causal steering is possible.

By clamping features, the authors induce predictable changes in model behavior (i.e., hallucinating code errors, praising sycophantically, even circumventing safety fine-tuning). This suggests that features not only correlate with but also drive model outputs. -

Safety-relevant features are discoverable.

The team uncovers features related to unsafe code, deception, manipulation, and harmful content. While they caution against overinterpretation, this represents a concrete step toward identifying and potentially controlling risk-related internal mechanisms.

Example

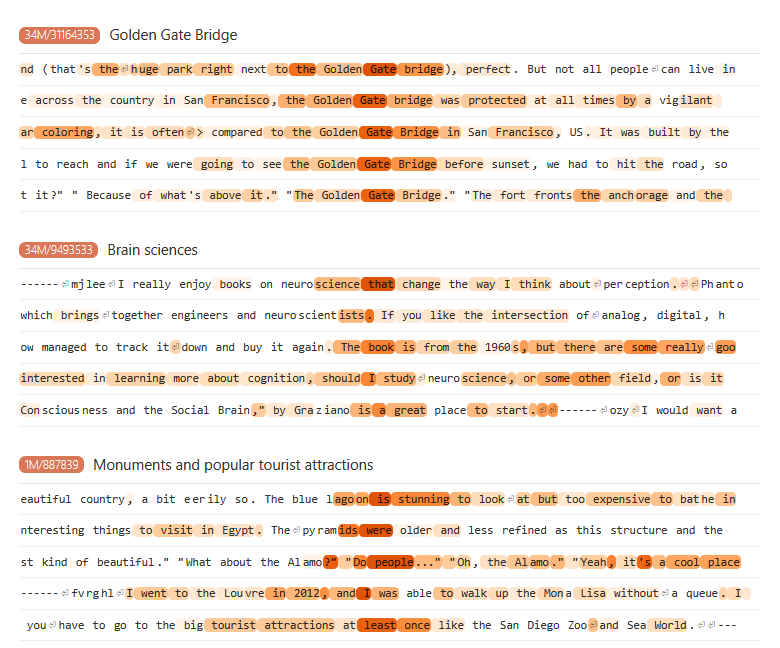

One illustrative case is the “Golden Gate Bridge” feature, which fires robustly on mentions of the landmark in multiple languages and contexts. Steering experiments show that clamping this feature induces the model to output bridge-related completions even in unrelated contexts. Interestingly, weaker activations extend to associated concepts like tourism and vacations, showing how feature space reflects both central and peripheral meanings.

Figure: A feature for the Golden Gate Bridge generalizes across languages and activates on semantically related concepts such as tourism and monuments. Steering the feature modifies model behavior in predictable ways.

Ratings

Novelty: 4/5

While dictionary learning for interpretability was introduced in prior work, this report is the first to demonstrate its feasibility and richness at scale in a modern production LLM. The safety-relevant features and causal steering examples elevate its importance.

Clarity: 4/5

The writing is strong, with detailed case studies and interactive visualizations. However, the interpretability of more abstract features is less rigorously validated, leaving some ambiguity about robustness.

Personal Perspective

This report was quite interesting for me and was the inspiration for a paper that I had submitted for review just yesterday. I think the ideas presented has a lot of potential for safety especially, something similar to a semantic firewall for LLMs. That said, most examples highlight distinct, low-level concepts (landmarks, syntax, bugs). But the harder challenge of capturing multifaceted, high-level abstractions like safety, temporality, or fairness, remains unsolved in this specific report (hint hint). There’s a couple points of concern for me, some of which I address in my own work: even humans struggle to define such concepts consistently, and their representation in superposed feature space is likely fractured and context-dependent. Not only that, the report mentions that for many important representations it may require extreme compute budgets to get the dictionary size that will capture the concept. But either way I think the key open question is whether these features can support reliable, auditable safety guarantees, or if interpretability will remain an exploratory tool rather than a verification standard.

Enjoy Reading This Article?

Here are some more articles you might like to read next: